Talkyard on k8s

Updates

- Added notice about archiving the talkyard-k8s repo, as I am no longer using Talkyard (or, for that matter, any commenting solution). The content of this post might be outdated.

Talkyard is open source software that provides discussion and commenting capabilities for websites. For example, the comments on this very site are powered by Talkyard.

As the official Talkyard releases already come containerized and work well as a Docker Compose deployment, I figured it would be a fun project to make Talkyard run on k8s. This post is a step-by-step guide on how to deploy Talkyard to a Kubernetes cluster.

If you couldn’t care less about k8s, there are already instructions on how to install Talkyard with Docker Compose over at the official Talkyard git repository debiki/talkyard-prod-one. Talkyard is also available as Software as a Service if self-hosting is not an option, see talkyard.io/plans.

Table of contents

- Talkyard-k8s Repository

- Prerequisites

- Workspace Setup

- Talkyard Configuration

- Persistent Storage

- Update kustomization.yaml

- Deploy to Cluster

- Access Talkyard on localhost

- Configuring Ingress

Talkyard-k8s Repository

I have set up a GitHub repository, ChrisEke/talkyard-k8s, which I intend to keep up to date with new Talkyard releases.

Edit: Well, that did not really pan out. The repo has now been archived due to lack of maintenance on my part, as I am no longer using Talkyard and am unable to stay up to date with the latest features.

For those already familiar with k8s there are suggested quickstart instructions in the README. The remainder of this post will cover a deployment in more detail.

Prerequisites

To follow along, you will need:

- kubectl

- a Kubernetes cluster with amd64 worker node(s). Unfortunately the container images are not yet arm/arm64 compatible.

- Kustomize (kubectl v1.14 and upwards includes Kustomize via the --kustomize/-k flag, but frozen at version v2.0.3. My recommendation is a standalone installation of the latest version, as later versions bring a good deal of new features and improvements.)

Sizing

This deployment is by default configured with minimal resource requirements. With all the different services combined 1.5GiB memory should be enough for a smaller community with limited concurrent updates.

The most memory-intensive services are app and search, both of which run on the Java runtime. The pre-configured Java heap sizes are 256 MiB for app and 192 MiB for search.

To modify these values, the easiest approach is to update the corresponding environment variable, either with a patch or with the Kustomize configMapGenerator.
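The patch route could look roughly like the sketch below, written in the same heredoc style used later in this post. It assumes the container inside the app Deployment is also named app, which I have not verified against the base manifests, and the file name patch-app-heap.yaml is only a suggestion.

```shell
# Write a strategic-merge patch that sets the heap size directly on the
# app Deployment. The container name "app" is an assumption; check the
# base manifests before relying on it.
cat << EOF > ./patch-app-heap.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app
spec:
  template:
    spec:
      containers:
        - name: app
          env:
            - name: PLAY_HEAP_MEMORY_MB
              value: "512"
EOF
```

The file would then be listed under patches in kustomization.yaml with a target of kind Deployment and name app. A value set under env takes precedence over a key with the same name that comes in through envFrom, so this should work regardless of how the base manifests inject the variable.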

Kustomize example:

    configMapGenerator:
      - name: app-environment-vars
        behavior: merge
        namespace: talkyard
        literals:
          - PLAY_HEAP_MEMORY_MB="512"
      - name: search-environment-vars
        behavior: merge
        namespace: talkyard
        literals:
          - ES_JAVA_OPTS="-Xms384m -Xmx384m"

Workspace Setup

- Create a workspace directory called talkyard:

    mkdir talkyard && cd talkyard

- Create the initial kustomization.yaml file, which references the GitHub repository ChrisEke/talkyard-k8s as the source for all base manifests.

?ref=v0.2021.02-879ef3fe1 specifies which Talkyard version to deploy. It can also be omitted completely to use the latest stable version.

    cat << EOF > kustomization.yaml
    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    namespace: talkyard
    resources:
      - github.com/ChrisEke/talkyard-k8s?ref=v0.2021.02-879ef3fe1
    EOF

- Optionally, we can now perform a dry run to see the manifests of the resources that will be deployed.

These correspond to the manifests located in ChrisEke/talkyard-k8s/tree/v0.2021.02-879ef3fe1/manifests

    kustomize build ./

This should fill the terminal with a lot of Kubernetes manifest specifications, for example:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/component: webserver
        app.kubernetes.io/part-of: talkyard
        app.kubernetes.io/version: v0.2021.02-879ef3fe1
      name: web
      namespace: talkyard

- Create the talkyard namespace in the cluster:

    kubectl create namespace talkyard

Talkyard Configuration

Most of the application-specific configuration is done in the file play-framework.conf.

An up-to-date template can be found in the repository debiki/talkyard-prod-one/blob/master/conf/play-framework.conf. There is also support for using environment variables.

play-framework.conf

- Download the latest play-framework.conf template:

    curl -SLO https://raw.githubusercontent.com/debiki/talkyard-prod-one/master/conf/play-framework.conf

- Configure the site owner email and, optionally, the outbound email server settings in play-framework.conf. Example:

    ...
    - talkyard.becomeOwnerEmailAddress="your-email@your.website.org"
    + talkyard.becomeOwnerEmailAddress="chriseke@ekervhen.xyz"
    ...

Secrets

Two secrets need to be present for Talkyard to run:

- play-secret-key, used by the application to sign session cookies (keep this safe, and do not store it in plaintext or check it into version control).

- postgres-db-password, for database access.
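The manual steps that follow can also be scripted so that the key never has to be copied by hand. The sketch below is only one way to do it; create_app_secret is a hypothetical helper name, while the secret name and key are the ones used in this post.

```shell
# Generate 32 random bytes and base64-encode them (44 characters).
PLAY_SECRET_KEY="$(head -c 32 /dev/urandom | base64)"

# Hypothetical helper: creates the Secret from the value passed as $1,
# so the literal key is not typed into the terminal or shell history.
create_app_secret() {
  kubectl create secret -n talkyard generic talkyard-app-secrets \
    --from-literal=play-secret-key="$1"
}
```

Once the talkyard namespace exists, running create_app_secret "$PLAY_SECRET_KEY" stores the generated value. Keep in mind that the variable only lives in the current shell session.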

- Generate random input for play-secret-key:

    head -c 32 /dev/urandom | base64
    VqWhJDQ3EjZRKpU3eL+ctRGwgou2SQN3RUPz4+kNLsg=

- Store it as a Kubernetes Secret in the talkyard namespace:

    kubectl create secret -n talkyard generic talkyard-app-secrets \
      --from-literal=play-secret-key='VqWhJDQ3EjZRKpU3eL+ctRGwgou2SQN3RUPz4+kNLsg='

- Set and create the database password:

    kubectl create secret -n talkyard generic talkyard-rdb-secrets \
      --from-literal=postgres-password='changeThis!'

Persistent Storage

Configuring and setting up persistent storage is optional while testing as the application will start up fine anyway. Note that if no persistent storage is configured all content and configuration made within Talkyard will be lost on pod restart. For production the following claims and volumes should be configured:

| component | volume name | mount path | access mode |
|---|---|---|---|
| web | user-uploads | /opt/talkyard/uploads | ReadWriteMany |
| app | user-uploads | /opt/talkyard/uploads | ReadWriteMany |
| cache | cache-data | /data | ReadWriteOnce |
| rdb | db-data | /var/lib/postgresql/data | ReadWriteOnce |
| search | search-data | /usr/share/elasticsearch/data | ReadWriteOnce |

Example Database Storage

Here is an example for configuring the database storage. Attributes and parameters may differ depending on available storage classes in the cluster.

In this case I am using Longhorn to set up the persistent volume claim:

- Create a persistent volume claim:

    cat << EOF > ./rdb-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: talkyard-rdb-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: longhorn
      resources:
        requests:
          storage: 10Gi
    EOF

- Create a patch file for mounting the volume to the rdb pod:

    cat << EOF > ./patch-rdb-volume.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: patch-rdb-volume
    spec:
      strategy:
        type: Recreate
      template:
        spec:
          containers:
            - name: rdb
              env:
                - name: PGDATA
                  value: /var/lib/postgresql/data/pgdata
              volumeMounts:
                - name: db-data
                  mountPath: /var/lib/postgresql/data
          volumes:
            - name: db-data
              persistentVolumeClaim:
                claimName: talkyard-rdb-pvc
    EOF

Note that I’ve included the Recreate strategy to allow the volume to detach, e.g. during an upgrade of the image version, and the environment variable PGDATA to use the sub-folder pgdata, which avoids a postgres start-up error when the data directory is not empty.
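The other volumes in the table can be handled the same way. As a rough sketch, here is what a claim for the shared user-uploads volume might look like. Since both web and app mount it, the access mode is ReadWriteMany. The claim name, the storage class my-rwx-storage-class and the 5Gi size are placeholders I made up; pick a storage class in your cluster that actually supports ReadWriteMany.

```shell
# Claim for the user-uploads volume shared by the web and app pods.
# Name, storage class and size are placeholders, not values from the repo.
cat << EOF > ./uploads-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: talkyard-uploads-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: my-rwx-storage-class
  resources:
    requests:
      storage: 5Gi
EOF
```

Mounting it would need one patch each for the web and app Deployments, following the same pattern as patch-rdb-volume.yaml but with the volume name user-uploads and the mount path /opt/talkyard/uploads.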

Update kustomization.yaml

The directory structure of the workspace should now look like this:

    talkyard/
    ├── kustomization.yaml
    ├── patch-rdb-volume.yaml
    ├── play-framework.conf
    └── rdb-pvc.yaml

Edit kustomization.yaml to include the configuration, PVC and patches:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    namespace: talkyard
    configMapGenerator:
      - name: app-play-framework-conf
        files:
          - app-prod-override.conf=play-framework.conf
    resources:
      - github.com/ChrisEke/talkyard-k8s?ref=v0.2021.02-879ef3fe1
      - rdb-pvc.yaml
    patches:
      - path: patch-rdb-volume.yaml
        target:
          name: rdb
          kind: Deployment

I’m using the Kustomize built-in configMapGenerator to create a ConfigMap object from play-framework.conf. This makes it easier to introduce changes to the configuration, as the generated ConfigMap has a hash of its content included in its name, which guarantees that the pod mounts the most up-to-date configuration.
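To see the hash suffix in practice, something like the following helper could be run from the workspace directory. It is only a sketch: the function name is hypothetical, and it simply greps the rendered output for the ConfigMap name used above.

```shell
# Hypothetical helper: print the generated ConfigMap name(s), including
# the hash suffix, as they appear in the rendered manifests.
show_generated_configmap_names() {
  kustomize build . | grep -o 'app-play-framework-conf-[a-z0-9]*' | sort -u
}
```

Running show_generated_configmap_names before and after editing play-framework.conf should print two different names. Because the Deployment references the ConfigMap by that name, a changed name also changes the pod template and triggers a new rollout.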

Deploy to Cluster

To trigger the deployment run:

    kustomize build . | kubectl apply -f -

If everything went well, kubectl get pods -n talkyard should display the pods with status Running:

    NAME                      READY   STATUS    RESTARTS   AGE
    app-777b8d5689-pbwfp      1/1     Running   0          4m
    cache-5d6ddfd55-tg46c     1/1     Running   0          4m
    rdb-76d86b7c86-sh287      1/1     Running   0          4m
    search-66b6b6fc86-69tnh   1/1     Running   0          4m
    web-694745b5fd-wtwq8      1/1     Running   0          4m

Access Talkyard on localhost

By default, the included nginx web server has a strict configuration for which headers it allows and passes on to back-end services, which is good, as it decreases the attack surface for HTTP header injection.

But it also causes some trouble when port-forwarding the web service on an unprivileged port, as the port number is stripped from the Host header, so the URLs for additional assets end up without it.
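The underlying difference is between two nginx variables: $host holds the host name without a port, while $http_host holds the Host request header as sent by the client, e.g. localhost:8080. The workaround described next swaps one for the other. To preview what that substitution does without touching a pod, it can be tried on a sample line first. The sample line below is my assumption of what the directive looks like in /etc/nginx/server-locations.conf.

```shell
# Sample directive, assumed to match the line in server-locations.conf.
sample='proxy_set_header Host $host;'

# Apply the same substitution as the workaround, on the sample only.
patched="$(printf '%s\n' "$sample" \
  | sed 's/proxy_set_header Host \$host/proxy_set_header Host \$http_host/')"

printf '%s\n' "$patched"
```

The printed line should read proxy_set_header Host $http_host; which is what the web pod ends up with after the workaround.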

- A temporary workaround is to modify the nginx configuration in the

webpod:

kubectl exec -n talkyard web-694745b5fd-wtwq8 -- \

sed -i 's/proxy_set_header Host \$host/proxy_set_header Host \$http_host/' \

/etc/nginx/server-locations.conf

kubectl exec -n talkyard web-694745b5fd-wtwq8 -- nginx -s reload- Now port-forward service

web:

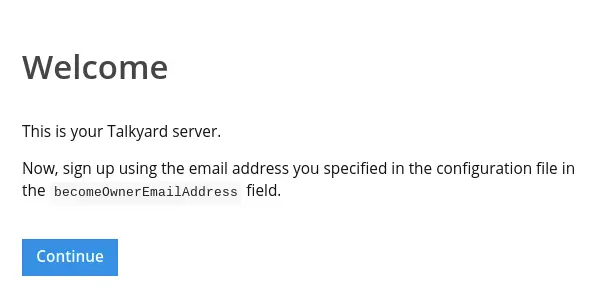

kubectl port-forward -n talkyard svc/web 8080:80- Access http://localhost:8080/ in a browser and the Talkyard Welcome page should appear:

Proceed by signing up with the same email address that was set earlier as talkyard.becomeOwnerEmailAddress in play-framework.conf. Continue with the initial configuration: community name, topics, general layout, etc.

When done, remember to delete the web pod to reset to the default $host header:

    kubectl delete -n talkyard pod web-694745b5fd-wtwq8

Configuring Ingress

Now we’re ready to expose Talkyard to the world via an Ingress object. In this example I’m using Traefik to automatically configure a TLS certificate with Let’s Encrypt. Configuration of Traefik is beyond the scope of this post, but there are plenty of resources on the subject available online.

The IngressRoute will terminate HTTPS and then forward all traffic to web service via HTTP on port 80.

- In the file play-framework.conf, change the hostname to a proper domain name and enable HTTPS to ensure that URIs generated by the application use the correct protocol:

    ...
    - talkyard.hostname="localhost"
    + talkyard.hostname="talkyard-example.ekervhen.xyz"
    - talkyard.secure=false
    + talkyard.secure=true
    ...

- Create the IngressRoute manifest:

    cat << "EOF" > ./ingressroute.yaml
    apiVersion: traefik.containo.us/v1alpha1
    kind: IngressRoute
    metadata:
      name: talkyard-ingressroute
    spec:
      entryPoints:
        - websecure
      routes:
        - match: Host(`talkyard-example.ekervhen.xyz`)
          kind: Rule
          services:
            - name: web
              port: 80
      tls:
        certResolver: letsencrypt
    EOF

- Update kustomization.yaml to include ingressroute.yaml as a resource:

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: talkyard

configMapGenerator:

- name: app-play-framework-conf

files:

- app-prod-override.conf=play-framework.conf

resources:

- github.com/ChrisEke/talkyard-k8s?ref=v0.2021.02-879ef3fe1

- rdb-pvc.yaml

- ingressroute.yaml

patches:

- path: patch-rdb-volume.yaml

target:

name: rdb

kind: Deployment- Re-deploy with Kustomize and kubectl:

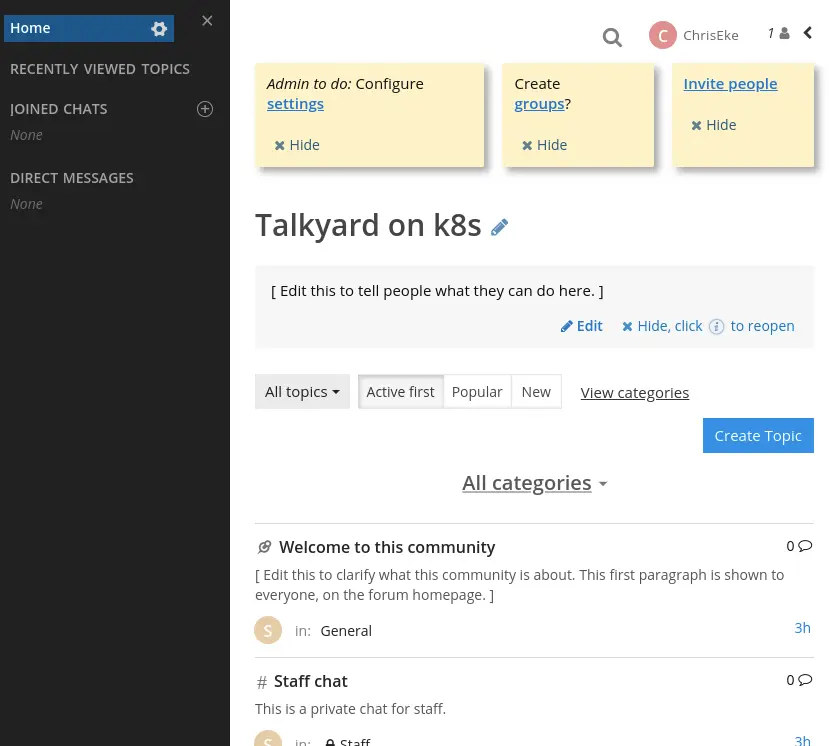

kustomize build . | kubectl apply -f -With ingress and a valid certificate provisioned we should now be greeted by the Talkyard index page when accessing https://talkyard-example.ekervhen.xyz:
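For a quick check from the terminal instead of a browser, a small curl wrapper along these lines might help. The function name is hypothetical, and the host is passed in as an argument.

```shell
# Hypothetical helper: request the site over HTTPS and print only the
# HTTP status code. curl exits non-zero if the certificate is not trusted.
check_talkyard_https() {
  # -s: silent, -S: still show errors, -o: discard the response body,
  # -w: print the status code followed by a newline.
  curl -sS -o /dev/null -w '%{http_code}\n' "https://$1/"
}
```

Calling check_talkyard_https talkyard-example.ekervhen.xyz and getting a status code back without a certificate error is a reasonable sign that both the IngressRoute and the Let’s Encrypt certificate are in place.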

With Talkyard successfully deployed the fun part of building a community can begin!

I want to highlight that there are most likely some additional tweaks and configuration required before this can be viewed as “production-ready”. Things like High Availability, Scalability and Backup Strategy are definitely worth looking into.

Questions? Suggestions? Errors spotted? Feel free to comment with Talkyard below or contact me!